OpenAI Announced O3 and O3 Mini – What Makes O3s Different

Artificial Intelligence has captured our imaginations with its potential to solve real-world problems, and OpenAI has consistently been at the forefront of AI innovation. With their latest announcement, the O3 and O3 Mini models, the AI landscape is about to undergo another major transformation.

As someone deeply fascinated by advancements in AI, I couldn’t help but take a closer look at this announcement. These new models promise to push the boundaries of reasoning, mathematics, and coding benchmarks.

In this article, we’ll dissect the details, understand what makes these models unique, and explore how they might reshape the way we interact with AI. Whether you’re a tech enthusiast or just AI-curious, stick around – I’ll keep it simple and comprehensive.

O3 and O3 Mini by OpenAI

The recent announcement of OpenAI’s O3 and O3 Mini models, which followed the release of o1 pro mode, is making waves in the AI space. These new reasoning models, revealed at the end of OpenAI’s 12-day event, set fresh benchmarks for AI reasoning and performance.

I find this announcement fascinating for several reasons. The o3 model seems designed for complex, high-stakes tasks, while the o3 Mini, a less compute-consuming model, is positioned as the more budget-friendly option – a smart move to cater to different audiences.

While these advanced reasoning models have not been released for public use yet, OpenAI has invited researchers to test them. So, if you’d like to be among the first to use these models, join the waitlist using this link.

According to OpenAI, it wants to address safety concerns and improve these models before their final release. “Here’s what we’ve built; now help us make it better,” CEO Sam Altman said.

The names of these models also caught my attention. Skipping “O2” for “O3” might seem like a small detail, but it’s a clever nod to the creative quirks often seen in tech naming conventions. Whether it’s a reference to uniqueness or a tip of the hat to tradition, it adds a layer of intrigue to the launch.

However, some say the new model was not named o2 because that name is already taken by the British telecom provider O2.

What Makes the o3 Models Stand Out?

O3 is the powerhouse – a model tailored for advanced reasoning tasks, boasting impressive benchmarks that we’ll dive into shortly.

O3 Mini is a comparatively cost-effective yet equally promising variant, perfect for developers and businesses looking for affordability without compromising too much on performance.

Mini models from OpenAI are not only less expensive but also faster, just as GPT-4o mini, available at ChatGPT Free Online, is faster than GPT-4o.

Breaking Barriers with Performance of O3

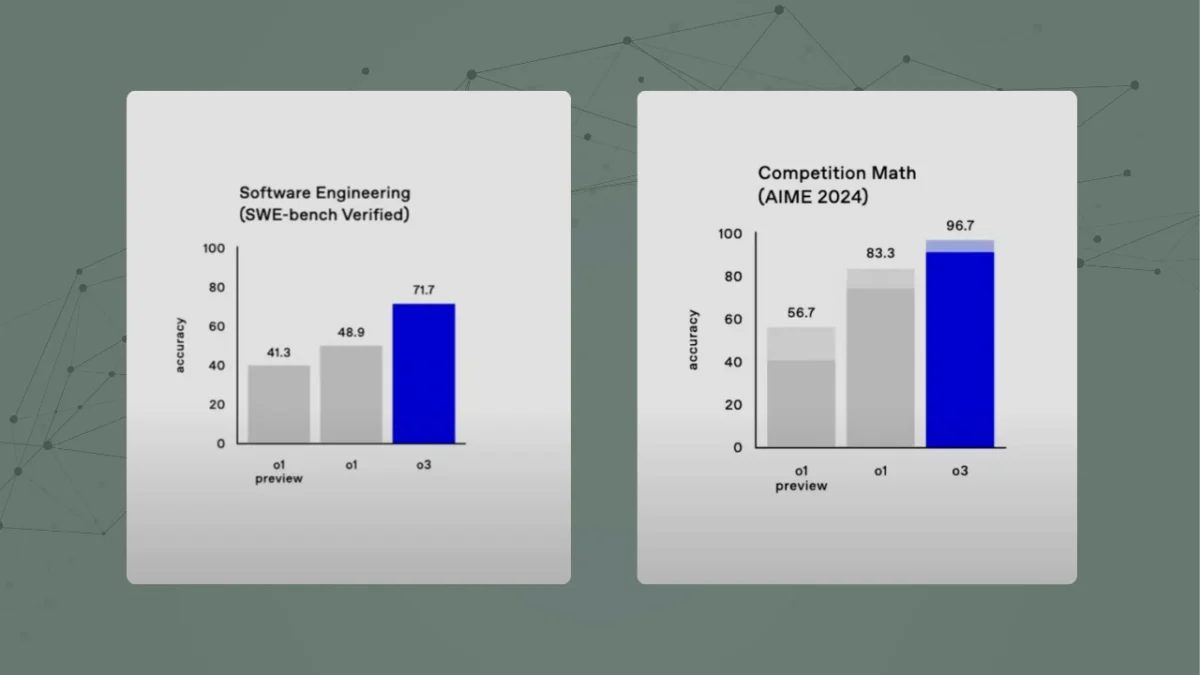

When it comes to performance benchmarks, o3 seems poised to raise the bar for what AI can achieve. The numbers are impressive – 71.7% accuracy on real-world coding tasks (SWE-bench Verified) and a jaw-dropping 96.7% accuracy on the American Invitational Mathematics Examination (AIME). These aren’t just incremental improvements; they’re leaps that demand attention.

But what really stands out is its performance on the ARC-AGI benchmark. If you’re unfamiliar, this test measures how well AI models adapt to new tasks they have not seen before, mimicking human-like generalization abilities. In its high-compute configuration, the newly announced model scored 87.5%, above the roughly 85% threshold usually treated as human-level performance, and set a new standard in the field.

These results point to a future where artificial intelligence might genuinely rival human expertise in niche domains.

Why These Benchmarks Matter

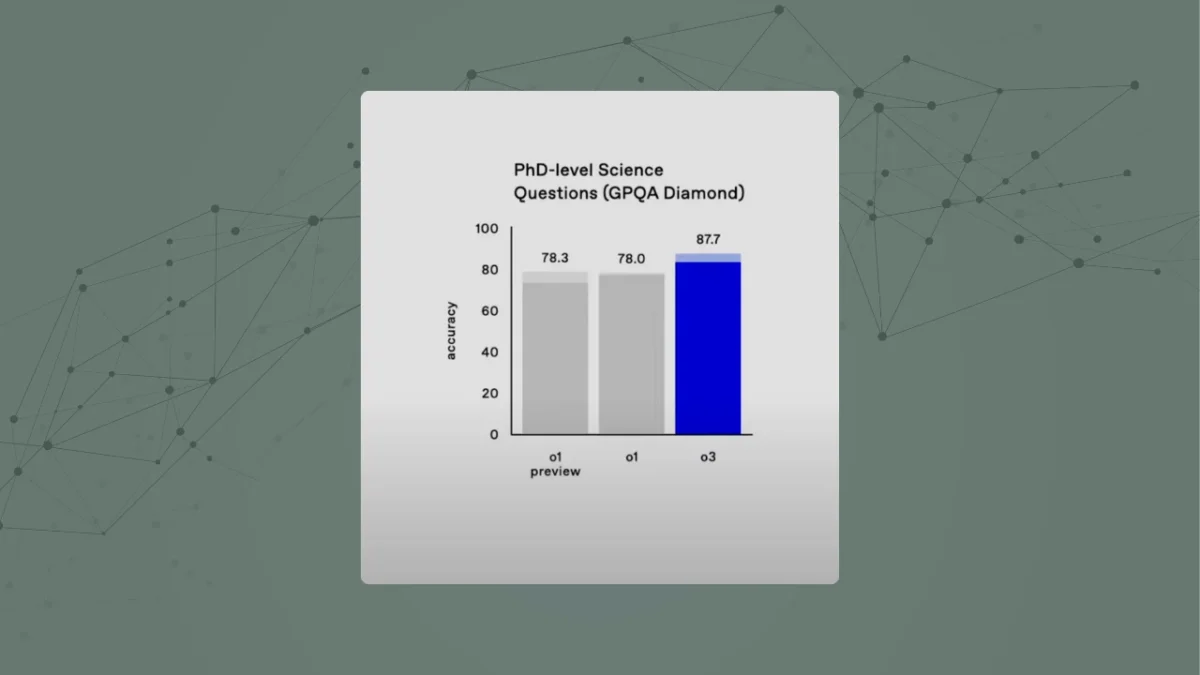

Think about it: scoring 87.7% on PhD-level science questions (the GPQA Diamond benchmark) isn’t just a technical flex – it’s a practical demonstration of AI’s potential in solving real-world, high-stakes problems. An AI assistant powered by O3 could help researchers in fields like physics or genetics push boundaries faster than ever before.

Another important highlight is Epoch AI’s FrontierMath benchmark, where this new model achieved about 25% accuracy. Now, that might sound low, but when you consider that other AI models barely break 2%, you realize how groundbreaking this result is. This benchmark tests some of the toughest problems AI has faced to date, so even small percentages mark significant progress.

O3 Can Code Like a Pro

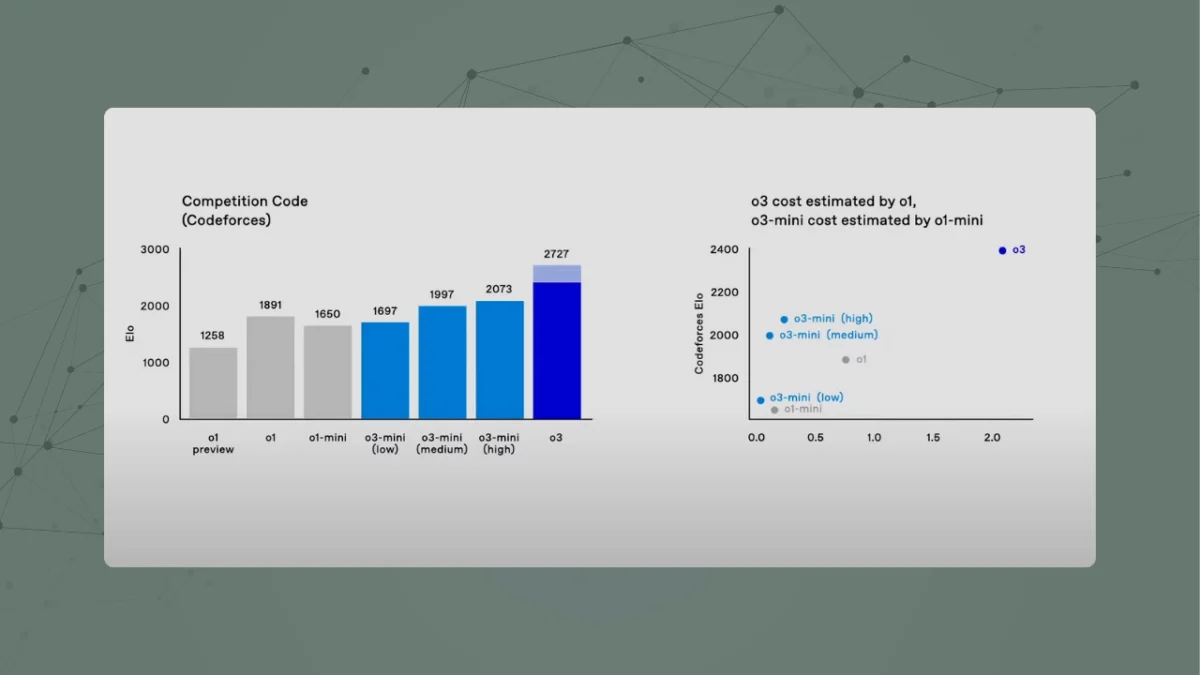

On competitive coding tasks, O3 reached a Codeforces Elo rating of 2727 – basically, it’s competing at the level of the best programmers out there. For anyone in the tech space, this opens up intriguing possibilities.

Could this reasoning model eventually handle debugging or even automate sections of complex software development projects?
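To put a rating like 2727 in perspective, the standard Elo model converts a rating gap into an expected score (roughly, a win probability). The sketch below assumes the textbook Elo formula with a 400-point scale; Codeforces uses its own Elo-style variant, so treat the result as illustrative. The 2000-rated opponent is a hypothetical example, not a figure from the announcement.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Hypothetical comparison: the reported o3 rating vs. a 2000-rated competitor.
o3_rating = 2727
opponent_rating = 2000  # assumption, chosen only for illustration

print(f"Expected score: {expected_score(o3_rating, opponent_rating):.3f}")
```

Under these assumptions the expected score comes out to roughly 0.985, meaning the higher-rated side would be expected to come out ahead in the vast majority of head-to-head contests.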

O3 Mini’s Performance

Although these upcoming models from OpenAI, the company behind ChatGPT, are highly advanced reasoning models, they are also very expensive to run. The Mini version uses less compute than the full o3 reasoning model, so it is comparatively cheaper, which makes it more accessible and practical.

While O3 is the star performer, O3 Mini brings advanced reasoning capabilities to the table, designed to strike a balance between performance and cost.

A Smarter Way to Think

One of the key innovations here is the concept of adaptive reasoning. The Mini reasoning model offers three modes – low, medium, and high reasoning effort – allowing it to allocate computational resources based on task complexity.

This adaptability ensures that developers and businesses can optimize both results and costs, a feature that resonates deeply in real-world applications.
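One way to picture this trade-off is a small routing function that picks a reasoning level per task. This is only a sketch: the complexity score, the thresholds, and the relative cost multipliers are hypothetical values invented for illustration, not anything OpenAI has published.

```python
from dataclasses import dataclass

# Hypothetical relative cost of each reasoning level (illustrative numbers only).
RELATIVE_COST = {"low": 1.0, "medium": 3.0, "high": 10.0}


@dataclass
class Task:
    description: str
    complexity: float  # hypothetical score in [0, 1], e.g. from a simple heuristic


def choose_effort(task: Task) -> str:
    """Map a task's estimated complexity to a reasoning level."""
    if task.complexity < 0.3:
        return "low"
    if task.complexity < 0.7:
        return "medium"
    return "high"


def estimated_cost(tasks: list[Task]) -> float:
    """Sum the relative cost of running each task at its chosen level."""
    return sum(RELATIVE_COST[choose_effort(t)] for t in tasks)


tasks = [
    Task("rename a variable", 0.1),
    Task("fix a failing unit test", 0.5),
    Task("design a concurrent scheduler", 0.9),
]

for t in tasks:
    print(f"{t.description}: {choose_effort(t)}")
print("Relative cost:", estimated_cost(tasks))
```

Routing simple tasks to the low level and reserving the high level for hard ones is what keeps the total below the cost of running everything at maximum effort.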

For instance, during demos, O3 Mini was shown generating a Python-based code generator that could evaluate its own performance. The ability to work efficiently under various constraints makes this model an excellent choice for anyone looking to experiment with AI without breaking the bank.

Performance That Punches Above Its Weight

When compared to earlier models like o1 Mini, the recently unveiled Mini model consistently performed better on both coding and mathematics benchmarks. For competitive programming tasks, its medium reasoning mode proved superior to o1 Mini. Its high reasoning mode, on the other hand, approached the performance of more resource-intensive models like o3.

Developer-Friendly Features of o3 Mini

The Mini reasoning model isn’t just about raw capability; it’s also about usability. Enhanced API functionalities such as structured outputs, function calling, and developer messages make it an excellent tool for software professionals.

The model offers an accessible pathway for developers to integrate advanced AI into their workflows without incurring the full cost of high-end models.
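As a rough illustration of how those three features fit together, the sketch below builds a request body in the shape used by OpenAI’s Chat Completions API: a `developer` message, a `reasoning_effort` setting, a function tool, and a JSON-schema `response_format`. It only constructs and prints the payload and makes no network call. The model identifier `o3-mini`, the `run_tests` tool, and the `review` schema are assumptions made for illustration, since the model was not publicly available at the time of writing.

```python
import json


def build_request(user_prompt: str, effort: str = "medium") -> dict:
    """Build a Chat Completions-style request body (no network call is made)."""
    if effort not in {"low", "medium", "high"}:
        raise ValueError(f"unsupported reasoning effort: {effort!r}")
    return {
        "model": "o3-mini",  # assumed identifier, not confirmed by the article
        "reasoning_effort": effort,
        "messages": [
            # Developer messages carry instructions from the application author.
            {"role": "developer", "content": "You are a careful code reviewer."},
            {"role": "user", "content": user_prompt},
        ],
        # Function calling: a hypothetical tool the model may ask the app to run.
        "tools": [{
            "type": "function",
            "function": {
                "name": "run_tests",
                "description": "Run the project's test suite and return failures.",
                "parameters": {
                    "type": "object",
                    "properties": {"path": {"type": "string"}},
                    "required": ["path"],
                    "additionalProperties": False,
                },
            },
        }],
        # Structured outputs: ask for a reply that matches a JSON schema.
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "review",  # hypothetical schema name
                "strict": True,
                "schema": {
                    "type": "object",
                    "properties": {
                        "summary": {"type": "string"},
                        "issues": {"type": "array", "items": {"type": "string"}},
                    },
                    "required": ["summary", "issues"],
                    "additionalProperties": False,
                },
            },
        },
    }


print(json.dumps(build_request("Review this function for bugs.", "high"), indent=2))
```

Keeping payload construction in a plain function like this makes it easy to switch the reasoning level per request without touching the rest of an application.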

OpenAI’s Collaboration with ARC Prize Foundation

OpenAI’s collaboration with the ARC Prize Foundation focuses on creating accurate benchmarks for evaluating artificial intelligence. Benchmarks have always been significant in measuring progress, but OpenAI and ARC aim to push these standards further, especially as AI systems become more advanced and complex.

Here’s a closer look at what this partnership entails and its implications for the future of AI.

The ARC Prize Foundation is a leader in designing benchmarks that assess AI’s ability to adapt, reason, and learn. Their work underpins many of the tests that developers rely on to gauge AI progress. The foundation’s partnership with OpenAI ensures that benchmarks evolve in line with the capabilities of the latest models, such as O3 and O3 Mini.

One standout benchmark associated with ARC is the ARC-AGI benchmark, which evaluates how well an AI system can pick up new skills from only a handful of examples. For O3, this benchmark highlighted its extraordinary ability to handle unseen tasks, surpassing human performance.

Such benchmarks are invaluable for distinguishing between models that excel at narrow, predefined tasks and those inching closer to general intelligence.

The OpenAI-ARC collaboration is set to introduce new benchmarks in 2025. These upcoming tests aim to address gaps in current evaluations and challenge models in novel ways. For example:

– Simulating real-world decision-making scenarios.

– Testing adaptability in chaotic, unpredictable environments.

– Measuring long-term learning capabilities.

These benchmarks will not only help refine the recently announced models but also shape future generations of AI.

Since the unveiling of OpenAI’s o3 models, there has been heated debate over whether O3 marks the dawn of AGI or whether its ARC-AGI result is misleading. I’ve critically analyzed both sides of this polarizing debate to better understand where we stand on the path to AGI.

Albert Haley

Albert Haley, the enthusiastic author and visionary behind ChatGPT 4 Online, is deeply fueled by his love for everything related to artificial intelligence (AI). Possessing a unique talent for simplifying complex AI concepts, he is devoted to helping readers of varying expertise levels, whether newcomers or seasoned professionals, in navigating the fascinating realm of AI. Albert ensures that readers consistently have access to the latest and most pertinent AI updates, tools, and valuable insights.