Prompt Engineering: A Practical Guide with 5 Case Studies

Are you struggling to understand and practice the complex process of prompt engineering? You don’t need to worry any further. I have composed this practical, easy-to-understand guide that covers the key aspects of prompt engineering.

This article explains how language models work and how prompts shape their output, and presents 9 strategies for composing customized prompts, illustrated with examples of effective prompt customization. It also includes 5 case studies of prompt engineering that demonstrate the impact of optimal prompt design on language model performance and outcomes.

Great, isn’t it?

What is Prompt Engineering?

Prompt engineering is a must-learn skill in this age of artificial intelligence (AI). It is the deliberate, strategic crafting of the input queries, statements, or instructions given to language models in order to steer their output in a desired direction.

It involves refining and tailoring the wording, structure, and context of the prompt to elicit accurate, contextually relevant, or task-specific responses from the language model.

Prompt engineering is a pivotal aspect of natural language processing (NLP). It gives you greater control over language models, letting you tailor their behavior to specific requirements and objectives.

By carefully designing prompts, we guide language models to produce more accurate and relevant responses, improving the overall performance of NLP systems. Prompt engineering allows customization of language models for specific tasks, enabling them to perform a wide range of tasks accurately, from language translation to question-answering and text summarization.

Moreover, tailoring prompts helps language models generate content that fits a particular domain, so that the generated text is appropriate and relevant to the intended context. Strategic prompt engineering can also help mitigate biases in language models by steering them toward fairer, more balanced responses, supporting the ethical use of NLP technologies.

Well-structured prompts save time and resources by guiding models toward the desired output, reducing the need for complex post-processing or extensive filtering of the generated text. Optimized prompts can also make language models more effective in conversational settings, helping them engage in natural, human-like dialogues and interactions.

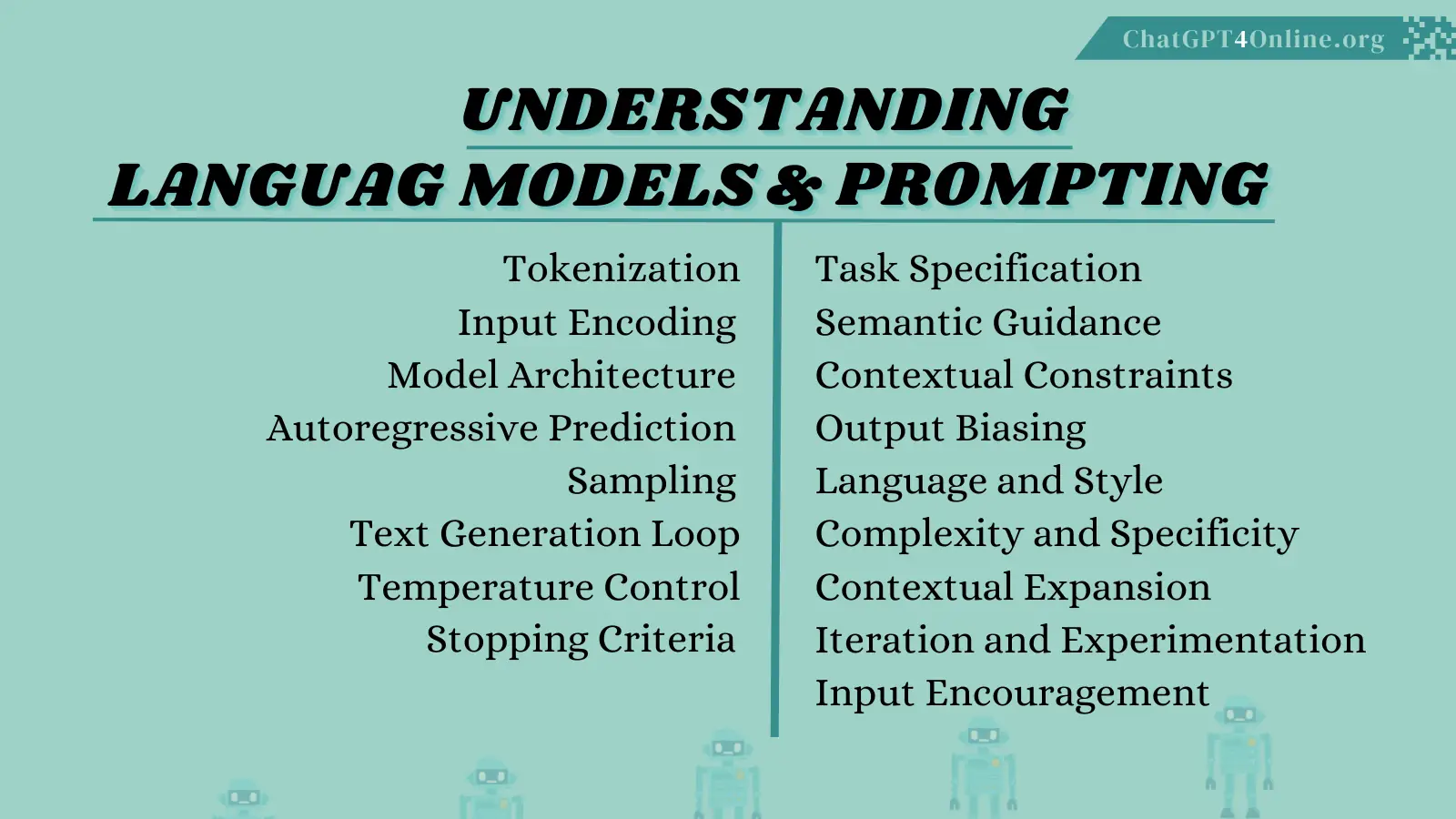

Understanding Language Models & Prompting

To master the skill of prompt engineering, you need to understand the relationship between language models and prompting. It’s important to know how they impact each other and how they actually work.

How Do Language Models Generate Text?

Language models generate text autoregressively: they predict the next word or token in a sequence based on the tokens that precede it. To do this, they combine tokenization, input encoding, next-token prediction, and sampling.

The process is iterative: each predicted token is appended to the context and influences the predictions that follow, until the desired amount of text has been generated. Let’s look at the 8 key steps of this process in more detail.

1- Tokenization

The input text is tokenized, breaking it down into smaller units such as words, sub-words, or characters. Each token typically corresponds to a single word or a sub-word fragment.
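As an illustration, here is a toy sub-word tokenizer in Python that splits each word greedily into the longest pieces found in a small vocabulary. The vocabulary and the `tokenize` function are hypothetical; real tokenizers (for example, byte-pair encoding) learn their vocabularies from large corpora and handle rare text differently.

```python
def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split each whitespace-separated word into the longest vocabulary pieces, left to right."""
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Try the longest possible piece first, shrinking until a vocabulary match is found.
            end = len(word)
            while end > start and word[start:end] not in vocab:
                end -= 1
            if end == start:
                # No piece matched: fall back to a single character.
                end = start + 1
            tokens.append(word[start:end])
            start = end
    return tokens


# Hypothetical toy vocabulary of whole words and sub-word fragments.
VOCAB = {"prompt", "engineer", "ing", "is", "a", "skill", "s"}

print(tokenize("Prompt engineering is a skill", VOCAB))
# ['prompt', 'engineer', 'ing', 'is', 'a', 'skill']
```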

2- Input Encoding

Each token is mapped to an integer ID from the model’s vocabulary, and each ID is then converted into a numerical vector, typically by looking it up in an embedding table. These vectors serve as the input to the model.
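A minimal sketch of this step, assuming a hypothetical five-entry vocabulary and a tiny embedding table with made-up 3-dimensional vectors. In a real model, the vocabulary has tens of thousands of entries and the embedding values are learned during training.

```python
# Hypothetical vocabulary: token string -> integer ID.
VOCAB = {"<unk>": 0, "prompt": 1, "engineer": 2, "ing": 3, "is": 4}

# Hypothetical embedding table: one vector per ID (real tables are learned, not hand-written).
EMBEDDINGS = [
    [0.0, 0.0, 0.0],   # <unk>
    [0.2, -0.1, 0.7],  # prompt
    [0.5, 0.3, -0.2],  # engineer
    [-0.4, 0.1, 0.0],  # ing
    [0.1, 0.9, 0.3],   # is
]


def encode(tokens: list[str]) -> list[int]:
    """Map tokens to IDs, using the <unk> ID for anything outside the vocabulary."""
    return [VOCAB.get(tok, VOCAB["<unk>"]) for tok in tokens]


def embed(ids: list[int]) -> list[list[float]]:
    """Look up one embedding vector per token ID."""
    return [EMBEDDINGS[i] for i in ids]


ids = encode(["prompt", "engineer", "ing", "rocks"])
print(ids)         # [1, 2, 3, 0]
print(embed(ids))  # four 3-dimensional vectors
```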

3- Model Architecture

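The model is usually a neural network, most commonly a transformer. A transformer processes all the tokens of the input in parallel, using causal self-attention so that each position can only draw on the tokens before it, and outputs a score for every token in the vocabulary as a candidate for the next position. The weights and parameters that make these predictions possible are learned during training.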
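One detail worth seeing in code is the causal mask that keeps a transformer from looking ahead. The sketch below computes scaled dot-product attention weights in plain Python for three positions, assuming tiny hand-written query and key vectors; real models derive these vectors from learned projections and use many attention heads and layers.

```python
import math


def causal_attention_weights(queries, keys):
    """Return a matrix whose row i holds how much position i attends to each position j.

    Scores are scaled dot products q_i . k_j / sqrt(d); positions j > i (the "future")
    are masked out before the softmax, so each token only sees itself and earlier tokens.
    """
    d = len(queries[0])
    weights = []
    for i, q in enumerate(queries):
        # Only positions 0..i are visible to position i.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in range(i + 1)]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps]
        # Masked (future) positions get exactly zero weight.
        row += [0.0] * (len(keys) - i - 1)
        weights.append(row)
    return weights


# Three token positions with hypothetical 2-dimensional query/key vectors.
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_attention_weights(q, k):
    print([round(w, 3) for w in row])
```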

4- Autoregressive Prediction

Generation starts from an initial prompt or seed text supplied by the user. The model then predicts the next token in the sequence based on this context.

5- Sampling

The model’s scores are converted into a probability distribution over the vocabulary, and the next token is selected from it: either the single most probable token (greedy decoding) or a token drawn at random according to the distribution (sampling).
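Here is a minimal sketch of the sampling step, assuming made-up scores (logits) for four candidate words. A softmax turns the scores into probabilities, greedy decoding takes the most probable word, and sampling draws one word in proportion to its probability using `random.choices` from Python’s standard library.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(vocab: list[str], probs: list[float]) -> str:
    """Pick the single most probable token."""
    return vocab[max(range(len(probs)), key=probs.__getitem__)]


def sample(vocab: list[str], probs: list[float], rng: random.Random) -> str:
    """Draw one token at random, weighted by its probability."""
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical next-token scores after the context "The cat sat on the".
vocab = ["mat", "sofa", "roof", "banana"]
logits = [3.0, 2.0, 1.0, -1.0]

probs = softmax(logits)
print([round(p, 3) for p in probs])
print(greedy(vocab, probs))                    # always "mat"
print(sample(vocab, probs, random.Random(0)))  # usually "mat", sometimes another word
```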

6- Text Generation Loop

The generated token is added to the input sequence, and the process iterates to predict the next token in the sequence based on the updated context. This loop continues until a specified length or stopping condition is reached.

7- Temperature Control

The temperature parameter in the sampling process controls the randomness of token selection. Higher temperature values increase randomness, leading to more diverse output, while lower values make the output more focused and deterministic.
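Temperature is usually applied by dividing the scores by T before the softmax: p_i = exp(z_i / T) / Σ_j exp(z_j / T). The sketch below, using hypothetical scores, prints the resulting distribution at three temperatures so you can see it sharpen below T = 1 and flatten above it.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """p_i = exp(z_i / T) / sum_j exp(z_j / T); T must be positive."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for four candidate tokens.
logits = [3.0, 2.0, 1.0, -1.0]
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```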

8- Stopping Criteria

Text generation is stopped based on a predefined length, a specific token indicating the end of the text, or other criteria, ensuring the generated text meets the desired length and format.
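Steps 4 to 8 can be tied together in a short loop. In this sketch, the “model” is a hypothetical lookup table of next-token probabilities (a bigram table), which is far simpler than a real language model but makes the loop, the temperature setting, and both stopping criteria easy to follow.

```python
import random

# Hypothetical toy "model": for each previous token, a distribution over next tokens.
# A real language model conditions on the whole context, not only the last token.
BIGRAMS = {
    "once": {"upon": 1.0},
    "upon": {"a": 1.0},
    "a": {"time": 0.8, "hill": 0.2},
    "time": {"<eos>": 0.7, "there": 0.3},
    "hill": {"<eos>": 1.0},
    "there": {"was": 1.0},
    "was": {"a": 1.0},
}


def generate(prompt, max_new_tokens=10, temperature=1.0, seed=None, eos="<eos>"):
    """Extend the prompt one token at a time until a stopping condition is met."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    rng = random.Random(seed)
    tokens = prompt.split()                      # step 4: start from the user's prompt
    for _ in range(max_new_tokens):              # step 8: stop at a maximum length...
        dist = BIGRAMS.get(tokens[-1])
        if not dist:
            break                                # ...or when no continuation is known
        candidates = list(dist)
        if temperature == 0:
            nxt = max(candidates, key=dist.get)  # greedy decoding
        else:
            # Step 7: p ** (1 / T), renormalized, equals softmax(log p / T).
            weights = [dist[c] ** (1.0 / temperature) for c in candidates]
            nxt = rng.choices(candidates, weights=weights, k=1)[0]  # step 5: sampling
        if nxt == eos:
            break                                # step 8: ...or at the end-of-text token
        tokens.append(nxt)                       # step 6: feed the token back into the context
    return " ".join(tokens)


print(generate("once", temperature=0))  # once upon a time
print(generate("once", seed=1))         # depends on the random draws
```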

How Do Prompts Influence the Output of Language Models?

Prompts are crucial in shaping the output of language models because they provide the initial context and instructions that guide the generation process. In effect, they steer the model’s behavior so that the generated output aligns with the desired task, context, style, or perspective.

Effective prompt engineering is therefore essential for harnessing the power of language models and obtaining meaningful, relevant, and tailored responses. Let’s look at 9 ways in which prompts influence the output of language models.

1- Task Specification

The prompt sets the task or objective for the language model. It informs the model about the desired outcome, whether it’s translation, summarization, question-answering, sentiment analysis, or any other task.

2- Semantic Guidance

The wording, structure, and context of the prompt guide the model’s understanding of the task and intended meaning. Specific keywords or phrases in the prompt direct the model to generate content related to those keywords.

3- Contextual Constraints

You can introduce constraints or parameters in your prompt that guide the output. For example, specifying a particular style, tone, or domain helps ensure that the generated text aligns with predefined criteria or requirements.

4- Output Biasing

You can use prompts to bias the model’s output towards certain perspectives, opinions, or sentiments. By framing the prompt carefully, you steer the model to generate responses that align with specific viewpoints.

5- Complexity and Specificity

The complexity, specificity, and length of the prompt influence the generated output. A more detailed or complex prompt may result in a longer and more detailed response, while a concise prompt may yield a succinct reply.

6- Language and Style

The language used in the prompt guides the language model to generate text in a particular style or register, whether formal, casual, technical, or creative.

7- Contextual Expansion

Elaborative prompts provide additional context or background information, helping the model generate more informed and contextually relevant responses.

8- Iteration and Experimentation

Through iterative experimentation with prompts, you can refine and adjust the wording, structure, and context to achieve the desired output. Even small modifications to a prompt can lead to substantial changes in the generated content.

9- Input Encouragement

Phrasing the prompt as an explicit request or instruction encourages the model to produce a response that fulfills that request and to follow the prompt more closely.
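Point 8 is easier to act on when prompt variants are generated systematically rather than edited by hand. The sketch below builds every combination of a few tone and length settings with `itertools.product`; the template and option values are illustrative assumptions, and each variant would then be sent to a model and the outputs compared.

```python
from itertools import product

# Hypothetical template and settings to experiment with.
TEMPLATE = "Summarize the following product review in a {tone} tone, using at most {length} words:\n\n{review}"
TONES = ["neutral", "enthusiastic", "formal"]
LENGTHS = [25, 50]


def prompt_variants(review: str) -> list[dict]:
    """Return one record per (tone, length) combination, keeping the settings for later comparison."""
    variants = []
    for tone, length in product(TONES, LENGTHS):
        variants.append({
            "tone": tone,
            "length": length,
            "prompt": TEMPLATE.format(tone=tone, length=length, review=review),
        })
    return variants


variants = prompt_variants("The battery lasts two days, but the screen scratches easily.")
print(len(variants))  # 6
print(variants[0]["prompt"])
```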

You can also practice your prompt engineering skills with our tried-and-tested ChatGPT prompts, customized for specific fields, at ChatGPT 4 Online.

The Art of Effective Prompt Engineering

To learn the art of effective prompt engineering, you need to know and explore certain strategies for composing customized prompts, since prompt customization is an essential part of the process.

To craft tailored, customized prompts, keep the following strategies in mind.

Strategies For Crafting Tailored Prompts

As noted above, crafting effective, tailored prompts is a key aspect of prompt engineering; it helps language models generate accurate and relevant responses for specific tasks.

Creating such prompts involves a strategic combination of understanding the task, specifying task-related keywords, providing clear instructions, incorporating contextual information, and refining the prompt through iterative testing and feedback, so that it performs well across various NLP tasks.

Here are the 9 strategies for crafting tailored or customized prompts:

1- Understanding Task Requirements

2- Be Clear and Direct

3- Include Task Keywords

4- Specify the Desired Format

5- Provide Contextual Information

6- Balance Length and Relevance

7- Test and Iterate

8- Utilize Domain Knowledge

9- Tailor to the Model’s Behavior
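Several of these strategies (being clear and direct, including task keywords, specifying the format, providing context, and balancing length) can be combined in a reusable template. The `build_prompt` helper and its section labels below are hypothetical, one possible layout rather than a standard.

```python
def build_prompt(task, context="", keywords=(), output_format="", max_words=None):
    """Assemble a prompt from labelled sections, skipping any that are empty."""
    sections = [f"Task: {task}"]                                  # clear and direct
    if context:
        sections.append(f"Context: {context}")                    # contextual information
    if keywords:
        sections.append("Focus on: " + ", ".join(keywords))       # task keywords
    if output_format:
        sections.append(f"Output format: {output_format}")        # desired format
    if max_words:
        sections.append(f"Length: no more than {max_words} words.")  # length vs. relevance
    return "\n".join(sections)


print(build_prompt(
    task="Summarize the attached academic essay on climate change.",
    context="The summary is for first-year undergraduate students.",
    keywords=["sea-level rise", "carbon emissions"],
    output_format="A single plain-text paragraph.",
    max_words=200,
))
```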

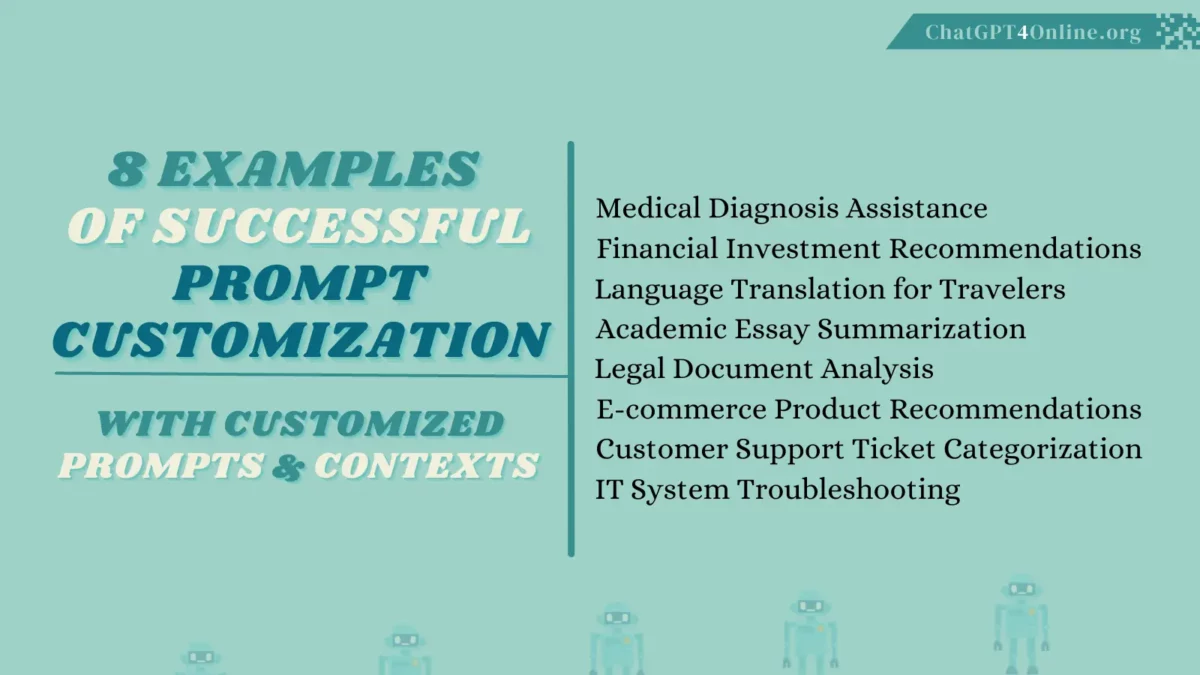

Examples of Successful Prompt Customization

Here are 8 examples illustrating how prompt customization can be applied in different domains to improve task performance. They show how well-defined, task-specific prompts can guide language models to generate contextually appropriate and accurate responses across a variety of domains.

The customization process ensures that the model’s output aligns with the intended task, enhancing its utility in diverse real-world applications.

1- Medical Diagnosis Assistance

Customized Prompt: “Given the patient’s symptoms and medical history, provide a comprehensive diagnosis with potential treatment options.”

Context: Tailoring the prompt with specific symptoms and patient details for a language model to generate a diagnosis and treatment options based on medical knowledge.

2- Financial Investment Recommendations

Customized Prompt: “Analyze the current market trends and risk tolerance for a 30-year-old investor seeking high-yield, long-term investments. Suggest a diversified portfolio.”

Context: Crafting a task-specific prompt to guide the model to generate investment recommendations aligned with a particular investor’s profile and objectives.

3- Language Translation for Travelers

Customized Prompt: “Translate the following English phrases into Spanish for a traveler in Spain: ‘Where is the nearest train station?’ and ‘I need a taxi.’”

Context: Specifying the task and context within the prompt to ensure accurate translation for a traveler in a specific region.

4- Academic Essay Summarization

Customized Prompt: “Summarize the given academic essay on climate change into a clear and concise 200-word summary.”

Context: Customizing the prompt to guide the model to provide a precise summary of a lengthy academic essay on a specific topic.

5- Legal Document Analysis

Customized Prompt: “Analyze the provided legal contract and highlight clauses related to termination and liabilities.”

Context: Focusing the model’s attention on specific clauses within a legal document for analysis, supporting legal professionals in their work.

6- E-commerce Product Recommendations

Customized Prompt: “Based on the user’s browsing history and preferences, suggest personalized product recommendations in the categories of electronics and fashion.”

Context: Customizing the prompt to guide the model to provide tailored product recommendations aligned with a user’s interests and browsing behavior.

7- Customer Support Ticket Categorization

Customized Prompt: “Categorize the customer support tickets into ‘Billing Issues’, ‘Technical Support’, ‘Product Feedback’, and ‘General Inquiries’ based on the ticket description.”

Context: Tailoring the prompt for automated ticket categorization, enhancing the efficiency of customer support systems.

8- IT System Troubleshooting

Customized Prompt: “Diagnose and troubleshoot a network connectivity issue in a corporate environment, focusing on potential router configurations and firewall settings.”

Context: Crafting a task-specific prompt for IT professionals to guide the model in troubleshooting a network issue effectively.
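Example 7 can be made more dependable by listing the allowed labels, adding a couple of labelled examples (few-shot prompting), and checking the model’s reply before using it. A minimal sketch, with hypothetical example tickets; the step that actually calls a model is left out.

```python
from typing import Optional

CATEGORIES = ["Billing Issues", "Technical Support", "Product Feedback", "General Inquiries"]

# Hypothetical labelled examples shown to the model before the real ticket.
EXAMPLES = [
    ("I was charged twice for my subscription this month.", "Billing Issues"),
    ("The app crashes whenever I open the settings page.", "Technical Support"),
]


def categorization_prompt(ticket: str) -> str:
    """Build a few-shot prompt that ends where the model should write the category."""
    lines = [
        "Categorize the customer support ticket into exactly one of: " + ", ".join(CATEGORIES) + ".",
        "Reply with the category name only.",
        "",
    ]
    for text, label in EXAMPLES:
        lines += [f"Ticket: {text}", f"Category: {label}", ""]
    lines += [f"Ticket: {ticket}", "Category:"]
    return "\n".join(lines)


def parse_category(reply: str) -> Optional[str]:
    """Accept the model's reply only if it matches an allowed category (case-insensitive)."""
    cleaned = reply.strip().strip(".").lower()
    for category in CATEGORIES:
        if cleaned == category.lower():
            return category
    return None  # unexpected reply: flag for human review or re-prompt


print(categorization_prompt("Do you ship to Canada?"))
print(parse_category(" technical support. "))  # Technical Support
print(parse_category("Shipping"))              # None
```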

I’ve also compiled a list of 50 ChatGPT copywriting prompts. You can try them to practice your prompt engineering skills.

How Does Optimal Prompt Design Lead to Significant Outcomes?

Practical applications illustrate the impact of well-crafted prompts on language model performance and outcomes. Here are 5 notable case studies showcasing the influence of optimal prompt design.

In each of the following cases, the effectiveness of the language model depends heavily on the design of the prompts. Optimal prompt engineering helps the model generate responses that align with the desired outcomes and tasks, which shows why prompts need to be crafted carefully to achieve specific objectives across domains.

Although success cannot be attributed to prompt design alone, the following examples highlight how well-structured, task-specific prompts significantly improve the performance and utility of language models in diverse applications, leading to better outcomes and greater value.

1- GPT for Creative Writing

In the field of creative writing, OpenAI’s GPT-3 and GPT-4 models have been a significant breakthrough. Widely regarded as among the most capable language models available, they have shown clear potential for aiding and enhancing creative writing tasks.

The GPT models can generate coherent and contextually appropriate text, making them quite valuable tools for writers seeking inspiration, plot ideas, dialogue suggestions, or creative content generation.

– Outcome

The use of GPT-3 and GPT-4 for creative writing tasks has yielded noteworthy outcomes. Writers and creative professionals have used these models to assist them in various aspects of their work, including:

Idea Generation

GPT models assist in brainstorming and ideation, providing a wide range of creative ideas and concepts that can be further developed into engaging narratives or storylines.

Plot Development

Writers use GPT models to suggest plot twists, story arcs, or pivotal events, helping to structure the narrative and maintain reader engagement.

Character Creation

The models aid in character development by suggesting traits, backgrounds, motivations, and complexities, enriching the depth and believability of the characters within a story.

Dialogues and Conversations

GPT models help generate natural and engaging dialogues, enabling writers to create compelling interactions between characters.

Descriptive Writing

Writers use GPT models to enhance the descriptive elements of their writing, enriching the imagery and sensory experience for readers.

– Role of Optimal Prompt Design

Optimal prompt design is a critical factor in achieving the desired creative outcomes using GPT-3 and 4. Crafting effective prompts is an art that involves providing the right context and guidance to the model, aligning with the specific creative objective. Here’s how optimal prompt design plays a pivotal role in the process of prompt engineering:

Clear Articulation of Creative Objective

Crafting prompts that clearly articulate the creative task or objective, such as “Create an engaging opening paragraph for a suspense thriller,” helps guide the model in generating appropriate and contextually aligned creative content.

Emphasis on Tone and Style

Specifying the desired tone, style, or mood in the prompt, such as “Write a romantic scene with a melancholic ambiance,” provides the model with essential cues to tailor the output accordingly.

Contextual Constraints

Providing contextual constraints within the prompt, like specifying a particular setting, time period, or character attributes, guides the model to generate content within the defined boundaries, enhancing relevance and coherence.

Interactive Prompting for Iterative Refinement

Utilizing interactive prompting techniques, where the model responds to incremental feedback, allows for iterative refinement of the creative content, fine-tuning it to align with the writer’s vision.

Engagement-Driven Prompts

Designing prompts that stimulate the model’s creativity, such as asking for unexpected plot twists or surprising character developments, encourages the generation of engaging and unpredictable creative elements.
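Interactive prompting is commonly implemented by keeping the whole conversation and appending each round of feedback as a new turn, so the model sees its earlier drafts when revising. The sketch below builds such a history as a list of role/content messages, a shape used by many chat-style APIs; `fake_model` is a hypothetical stand-in for a real model call.

```python
def fake_model(messages: list[dict]) -> str:
    """Hypothetical stand-in for a chat model; a real system would call an LLM here."""
    drafts_so_far = sum(m["role"] == "assistant" for m in messages)
    return f"[draft {drafts_so_far + 1}]"


def refine(initial_prompt: str, feedback_rounds: list[str]) -> list[dict]:
    """Run one initial request plus one revision per piece of feedback, keeping the full history."""
    messages = [
        {"role": "system", "content": "You are a creative writing assistant."},
        {"role": "user", "content": initial_prompt},
    ]
    messages.append({"role": "assistant", "content": fake_model(messages)})
    for feedback in feedback_rounds:
        # Each round of feedback is appended, so the model sees the earlier drafts.
        messages.append({"role": "user", "content": feedback})
        messages.append({"role": "assistant", "content": fake_model(messages)})
    return messages


history = refine(
    "Write an engaging opening paragraph for a suspense thriller set in 1920s Vienna.",
    ["Make the tone more melancholic.", "End with an unexpected twist."],
)
for m in history:
    print(f"{m['role']}: {m['content']}")
```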

By carefully structuring prompts that capture the creative context and goals, writers can harness GPT models to elevate their creative writing, adding originality, intrigue, and depth to their work. Optimal prompt design is the gateway to unlocking the creative capabilities of GPT-3 and GPT-4 and using them effectively for creative expression.

Here’s a list of 50 customized ChatGPT prompts for writing a poem. You can try these prompts to practice your prompt engineering skills on creative writing tasks.

2- Medical Diagnosis Assistance

The integration of language models in medical diagnosis assistance has shown promising potential. Language models are utilized to aid healthcare professionals by suggesting potential diagnoses based on provided medical symptoms. This application holds significant promise in streamlining the diagnostic process, enhancing accuracy, and ultimately improving healthcare decision-making.

– Outcome

Utilizing language models for medical diagnosis assistance has led to several significant outcomes in the healthcare domain:

Symptom Analysis

Language models effectively process and analyze detailed medical symptoms provided by patients, assisting healthcare professionals in understanding the patient’s condition comprehensively.

Diagnosis Suggestions

By leveraging the symptoms provided, GPT models can suggest a range of potential diagnoses or medical conditions that align with the symptoms, aiding doctors in considering a broader spectrum of possibilities during diagnosis.

Decision Support for Healthcare Professionals

GPT’s diagnostic suggestions serve as a valuable decision support tool for healthcare professionals, helping them cross-verify their own diagnostic insights and consider a more diverse set of potential diagnoses.

Efficient Triage

Language models contribute to efficient triage processes, enabling faster identification and prioritization of critical cases based on symptoms and suggested diagnoses.

– Role of Optimal Prompt Design

The role of optimal prompt design is critical in achieving accurate and precise diagnostic suggestions. Crafting effective prompts that accurately describe medical symptoms and conditions is fundamental in guiding the language model to generate appropriate and meaningful diagnostic insights. Here’s how optimal prompt design significantly contributes to the process of prompt engineering:

Clear and Detailed Symptom Description

Designing prompts that provide a clear and detailed description of the patient’s symptoms ensures that the model has accurate information to base its diagnostic suggestions on.

Relevant Medical History Inclusion

Incorporating relevant medical history within the prompts helps contextualize the symptoms and improves the accuracy of diagnostic suggestions by considering the patient’s health background.

Structured Presentation of Information

Presenting symptoms in a structured and organized manner within the prompt facilitates better understanding and analysis by the language model, resulting in more accurate and relevant diagnoses.

Contextual Cues for Differential Diagnosis

Providing contextual cues within the prompt about the nature and duration of symptoms assists the model in generating more targeted and precise differential diagnoses.

Feedback Loop for Refinement

Employing an interactive prompting approach allows for feedback from healthcare professionals on the generated diagnostic suggestions, enabling iterative refinement and improvement of the model’s diagnostic accuracy over time.
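The structured-presentation and contextual-cue points can be applied by rendering patient data in a fixed layout before the instruction. In this sketch, the `PatientRecord` fields and the prompt wording are hypothetical, chosen to match the decision-support framing above.

```python
from dataclasses import dataclass, field


@dataclass
class Symptom:
    name: str
    duration_days: int
    severity: str  # e.g. "mild", "moderate", "severe"


@dataclass
class PatientRecord:  # hypothetical fields, for illustration only
    age: int
    sex: str
    symptoms: list[Symptom]
    history: list[str] = field(default_factory=list)


def diagnosis_prompt(record: PatientRecord) -> str:
    """Render the record in a fixed layout, then state the request."""
    lines = [f"Patient: {record.age}-year-old {record.sex}", "Symptoms:"]
    for s in record.symptoms:
        # Duration and severity act as contextual cues for a differential diagnosis.
        lines.append(f"- {s.name} ({s.severity}, {s.duration_days} days)")
    lines.append("Relevant medical history: " + ("; ".join(record.history) or "none reported"))
    lines.append(
        "For a clinician's review, list the most likely differential diagnoses, "
        "the reasoning for each, and which further tests would help distinguish them."
    )
    return "\n".join(lines)


record = PatientRecord(
    age=54,
    sex="male",
    symptoms=[Symptom("chest pain on exertion", 14, "moderate"), Symptom("shortness of breath", 10, "mild")],
    history=["hypertension", "smoker"],
)
print(diagnosis_prompt(record))
```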

By carefully crafting prompts that capture precise symptom descriptions and relevant medical context, optimal prompt design helps the model generate insightful and accurate diagnostic suggestions.

This approach has the potential to transform the medical diagnostic process, offering valuable decision support to healthcare professionals and ultimately improving patient care and outcomes.

3- Automated Code Generation

The use of language models for automated code generation has emerged as a groundbreaking application, offering a potential paradigm shift in software development. Using GPT models, developers can describe their requirements in plain language, and the model generates corresponding code snippets or even complete programs. This streamlines the coding process, making it more intuitive and efficient.

– Outcome

The outcome of employing language models for automated code generation has been transformative in the field of software development, resulting in several notable achievements:

Enhanced Productivity

Developers generate code more rapidly and efficiently by simply describing the desired functionality, reducing the time and effort required for manual coding.

Rapid Prototyping

GPT models facilitate quick prototyping by converting high-level ideas or concepts into functional code, enabling rapid validation and iteration of software ideas.

Reduced Coding Errors

Automated code generation from well-designed prompts can help reduce coding errors by translating clear, human-readable descriptions into executable code, although the generated code still needs to be reviewed and tested.

Learning and Skill Development

Developers can learn and improve their coding skills by studying the generated code and gaining insights into effective programming practices and methodologies.

– Role of Optimal Prompt Design

Optimal prompt design plays a pivotal role in achieving accurate and efficient automated code generation. Crafting effective prompts that clearly specify the desired functionality, logic, or requirements is essential in guiding the language model to generate precise and relevant code snippets. Here’s how the role of optimal prompt design is significant in this context of prompt engineering:

Detailed Functional Description

Designing prompts that provide a comprehensive and detailed description of the desired functionality, input-output specifications, and any constraints is crucial to guide the model in generating accurate and relevant code.

Incorporating Logical Constraints

Including logical constraints within the prompt, such as input validation rules or algorithmic constraints, ensures that the generated code adheres to the specified logic and requirements.

Request for Specific Code Constructs

Prompting the model to generate code constructs or patterns (e.g., loops, conditionals, functions) specific to the desired functionality guides the model in crafting well-structured and efficient code.

Considering Target Programming Language

Specifying the target programming language or platform within the prompt helps in generating code snippets that are compatible and optimized for the intended environment.

Utilizing Interactive Prompting

Employing an interactive prompting approach allows developers to iteratively refine the generated code by providing feedback and requesting modifications, resulting in code that aligns more accurately with the desired functionality.
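A code-generation prompt can bundle the points above: target language, an exact signature, the required behavior, constraints, and input-output examples. The `codegen_prompt` helper below is a hypothetical sketch; the example pairs can also be reused as test cases to check whatever code the model returns.

```python
def codegen_prompt(language, signature, description, constraints, examples):
    """Build a code-generation prompt from a spec; all argument values here are illustrative."""
    lines = [
        f"Write a {language} function with this exact signature:",  # target language
        signature,
        "",
        f"Behavior: {description}",                                  # functional description
        "Constraints:",                                              # logical constraints
    ]
    lines += [f"- {c}" for c in constraints]
    lines.append("Examples (input -> expected output):")
    lines += [f"- {inp} -> {out}" for inp, out in examples]
    lines.append("Return only the code, without explanation.")
    return "\n".join(lines)


print(codegen_prompt(
    language="Python",
    signature="def is_valid_email(address: str) -> bool:",
    description="Return True if the address has exactly one '@' with non-empty text on both sides.",
    constraints=["Use only the standard library.", "Do not raise exceptions for any string input."],
    examples=[("'a@b.com'", "True"), ("'a@@b.com'", "False"), ("''", "False")],
))
```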

By meticulously crafting prompts that capture the required functionality and logic, optimal prompt design helps ensure that the generated code is precise, efficient, and aligned with the developer’s intentions.

This approach is changing the software development process, making it more accessible and helping developers turn their ideas into functional code quickly and accurately.

4- Sentiment Analysis for Market Research

The integration of sentiment analysis using language models in market research has proven to be a powerful tool for businesses seeking to understand customer sentiments, feedback, and market trends. Sentiment analysis involves evaluating and interpreting customer reviews, social media interactions, and other text data to gauge attitudes, emotions, and opinions toward a product or service. Optimal prompt design is a key factor in harnessing the capabilities of language models to perform sentiment analysis effectively.

– Outcome

Leveraging sentiment analysis for market research has resulted in several significant outcomes for businesses and industries:

Customer Feedback Analysis

Sentiment analysis helps businesses analyze customer feedback in an organized and efficient manner, providing insights into how customers perceive their products or services.

Identifying Strengths and Weaknesses

Analyzing sentiment allows businesses to identify both positive aspects (strengths) and negative aspects (weaknesses) of their products or services, aiding in strategic decision-making and improvements.

Market Trend Insights

By aggregating sentiment across a large number of reviews or social media posts, businesses identify emerging market trends and consumer preferences, enabling timely adjustments to marketing strategies.

Competitor Analysis

Comparing sentiment analysis results for their products with those of competitors allows businesses to gain a competitive edge by understanding their relative position and differentiating factors.

– Role of Optimal Prompt Design

Optimal prompt design is pivotal in ensuring accurate and insightful sentiment analysis for market research purposes. Designing effective prompts that direct the model to analyze and summarize customer reviews is fundamental in achieving the desired outcomes. Here’s how the role of optimal prompt design is significant in this aspect of prompt engineering:

Clear and Specific Instructions

Crafting prompts with clear and specific instructions that guide the model to analyze customer sentiment, summarize feedback, and identify positive and negative sentiments ensures a focused analysis.

Targeted Product or Service Mention

Including the specific product or service within the prompt helps in directing the analysis toward the targeted aspect, allowing businesses to gather insights about the sentiment related to a particular offering.

Instructions for Sentiment Classification

Guiding the model to classify sentiment into categories (e.g., positive, negative, neutral) within the prompt helps in obtaining a structured and categorized sentiment analysis result.

Incorporating Relevant Keywords

Including keywords related to the industry, product, or service in the prompt guides the model to focus on the specific domain, enhancing the relevance and accuracy of the sentiment analysis.

Interactive Feedback Loop

Utilizing an interactive prompting approach enables businesses to iterate and refine the analysis by providing real-time feedback to the model based on initial sentiment analysis results.
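Clear classification instructions pay off when the replies are aggregated. This sketch builds a sentiment prompt that asks for a single label and then tallies a batch of replies; the replies shown are hypothetical, and anything outside the three allowed labels is counted as unparsed instead of being guessed.

```python
from collections import Counter

LABELS = ("positive", "negative", "neutral")


def sentiment_prompt(product: str, review: str) -> str:
    """Ask for exactly one label so replies are easy to parse."""
    return (
        f"Classify the sentiment of this customer review of {product} "
        f"as exactly one word: positive, negative, or neutral.\n\n"
        f"Review: {review}\nSentiment:"
    )


def parse_label(reply: str) -> str:
    """Normalize a model reply; anything unexpected is counted as 'unparsed'."""
    word = reply.strip().split()[0].strip(".,!").lower() if reply.strip() else ""
    return word if word in LABELS else "unparsed"


def summarize(replies: list[str]) -> dict[str, float]:
    """Share of each label across all replies."""
    counts = Counter(parse_label(r) for r in replies)
    total = len(replies) or 1  # avoid dividing by zero on an empty batch
    return {label: counts[label] / total for label in (*LABELS, "unparsed")}


# Hypothetical model replies for five reviews of the same product.
replies = ["Positive", "negative.", "positive", "Neutral", "I think it's mixed"]
print(summarize(replies))
# {'positive': 0.4, 'negative': 0.2, 'neutral': 0.2, 'unparsed': 0.2}
```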

By carefully constructing prompts that guide the model to analyze and summarize customer sentiment, optimal prompt design helps businesses gain valuable insights for product improvements, marketing strategies, and a deeper understanding of market dynamics.

This approach enhances the effectiveness of sentiment analysis in market research, enabling informed decision-making and ultimately contributing to business growth and customer satisfaction.

5- Legal Document Analysis

Using GPT language models for legal document analysis has emerged as a transformative application within the legal domain. Legal professionals use language models to extract critical legal clauses, relevant information, and key insights from contracts, agreements, statutes, and other legal documents.

This technology significantly streamlines legal research and aids lawyers in digesting complex legal texts more efficiently.

– Outcome

The application of language models like GPT-3 and GPT-4 for legal document analysis has yielded substantial outcomes, improving legal practitioners’ workflows and enhancing legal processes:

Efficient Information Extraction

Language models efficiently extract key legal clauses, definitions, obligations, and other essential information from lengthy legal documents, saving valuable time for legal professionals.

Legal Research Support

Automated extraction of pertinent legal provisions and insights from diverse legal texts facilitates more informed legal research, allowing lawyers to build stronger cases and make well-informed decisions.

Risk Mitigation

Accurate identification and analysis of critical clauses and potential risks within contracts assist legal teams in identifying and addressing potential legal liabilities and mitigating risks effectively.

Due Diligence Enhancement

Language models help streamline due diligence processes by swiftly extracting crucial data from contracts and agreements, contributing to thorough assessments during mergers, acquisitions, or legal audits.

– Role of Optimal Prompt Design

Optimal prompt design is crucial in achieving accurate and precise extraction of key legal clauses and information from legal documents. Crafting effective prompts that precisely describe the required legal information plays a pivotal role in guiding the language model to generate focused and accurate extractions. Here’s how optimal prompt design significantly contributes to the process:


Clear Instructions for Extraction

Crafting prompts with clear, specific instructions that tell the model which legal clauses, provisions, or details to extract helps ensure precise and relevant information extraction.

Providing Legal Context

Providing context within the prompt, such as the type of legal document (contract, statute, etc.) or the relevant legal area (e.g., contract law, intellectual property), guides the model to tailor the extraction accordingly.

Targeted Legal Terminology

Incorporating relevant legal terminology and phrases within the prompt ensures that the model focuses on extracting information aligned with legal language and conventions.

Interactive Iterative Refinement

Utilizing an interactive prompting approach allows legal professionals to iteratively refine the extraction by providing feedback and requesting modifications, resulting in more accurate extractions.

Incorporating Keywords and Sections

Including specific keywords, section numbers, or identifiers from the legal document within the prompt helps the model precisely locate and extract the intended information.
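Asking the model for a machine-readable format makes extractions easier to verify. A minimal sketch, assuming the model has been instructed to reply with a JSON object; the clause types, the section-number field, and the sample reply are hypothetical, while `json.loads` is from Python’s standard library.

```python
import json

REQUIRED_KEYS = {"termination", "liability"}


def extraction_prompt(document_type: str, contract_text: str) -> str:
    """Name the document type, the clauses wanted, and the exact JSON shape of the answer."""
    return (
        f"You are reviewing a {document_type}. Extract the clauses on termination and liability.\n"
        'Reply with JSON only, in the form {"termination": [{"section": "...", "text": "..."}], '
        '"liability": [{"section": "...", "text": "..."}]}. '
        "Use an empty list if a clause type is absent.\n\n"
        f"Contract:\n{contract_text}"
    )


def parse_extraction(reply: str) -> dict:
    """Parse the model's JSON reply and check that every expected clause type is present."""
    data = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return data


# Hypothetical model reply.
reply = '{"termination": [{"section": "12.1", "text": "Either party may terminate with 30 days\' notice."}], "liability": []}'
result = parse_extraction(reply)
print([c["section"] for c in result["termination"]])  # ['12.1']
```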

By carefully designing prompts that capture the precise legal information sought, optimal prompt design helps the model generate accurate, relevant, and contextually appropriate extractions.

This approach is transforming legal document analysis, making it more efficient and effective for legal professionals and ultimately enhancing their productivity and the quality of the legal services they provide.

Final Thoughts

You’ve delved into the depths of prompt engineering, gaining valuable insights into how it plays a pivotal role in shaping the behavior and outcomes of language models. Now, it’s time to put this knowledge into action and optimize your prompt engineering strategies. Start by crafting various prompts for specific tasks or domains you are interested in. Experiment with different styles, lengths, and structures to understand how they influence model outputs.

Next, identify tasks or projects where language models can assist you. Tailor prompts specifically for these tasks, incorporating task-related keywords and explicit instructions for the desired output. Refine your prompts iteratively, considering the nuances of your task, and test and modify them based on the model’s responses to achieve the most accurate and relevant outcomes.

Your journey in prompt engineering has just begun. The knowledge you’ve acquired is a powerful tool to transform your interactions with language models and achieve remarkable outcomes in diverse applications. Embrace the creative possibilities and let your prompt engineering strategies flourish!

Albert Haley

Albert Haley, the enthusiastic author and visionary behind ChatGPT4Online, is deeply fueled by his love for everything related to artificial intelligence (AI). Possessing a unique talent for simplifying intricate AI concepts, he is devoted to helping readers of varying expertise levels, whether they are newcomers or seasoned professionals, navigate the fascinating realm of AI. Albert ensures that readers consistently have access to the latest and most pertinent AI updates, tools, and valuable insights. His commitment to delivering exceptional quality, precise information, and crystal-clear explanations sets his blogs apart, establishing them as a dependable, go-to resource for anyone keen on harnessing the potential of AI.